Achieving AI Safety Through Ethical Implementation

Artificial Intelligence (AI) has rapidly evolved, presenting opportunities, risks, and a need for AI safety measures in the workplace. AI safety is a comprehensive concept that plays a pivotal role in shaping the responsible development of these systems by focusing on the human elements of ethics, fairness, transparency, and more. In this article, we’ll discuss how ethical considerations and data privacy guidelines provide the compass for safely navigating the use of AI systems in your workplace.

The Significance of AI Safety

According to the 2023 State of the CIO report, 26% of IT leaders highlight machine learning and artificial intelligence as the key focus areas for IT investment. With a growing reliance on AI technology, it is important to take a human-centric approach that places values and ethics at the forefront of technological advancements. This means using AI systems in the workplace with a primary focus on meeting the needs and well-being of humans, and prioritizing fair data collection, data privacy, and compliance with regulations.

AI safety aims to create technology that serves humanity rather than systems that have unintended negative consequences. These consequences can include the unsafe transmission of sensitive data, unethical decisions, the sharing of incorrect information drawn from AI-assisted research, unfair biases in AI models, noncompliance with data regulations like GDPR or HIPAA, and the failure or malfunction of AI systems. Robust testing, continuous monitoring, and fail-safe mechanisms are essential to the reliability of AI systems and to their safe integration into various aspects of your workplace.

Promoting Data Privacy & Legal Compliance

One significant concern arises from the vast amounts of data processed by AI systems, including personally identifiable information (PII) and other sensitive details. If not handled carefully, AI projects can compromise data privacy in several ways. They often involve large datasets that, if not securely stored, may become targets for bad actors seeking unauthorized access. Workplaces must ensure that personal and sensitive data used in AI systems is securely stored and anonymized. Implementing encryption protocols is one of the best ways to safeguard data during storage and transmission in order to prevent privacy breaches of sensitive company, employee, or customer data.
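As a rough illustration of removing direct identifiers before data reaches an AI system, the sketch below replaces PII fields with keyed hashes using Python's standard `hmac` and `hashlib` modules. The field names, the sample record, and the `SECRET_KEY` value are hypothetical. Note that this is pseudonymization rather than full anonymization or encryption, so it complements secure storage and encrypted transmission instead of replacing them.

```python
import hashlib
import hmac

# Hypothetical secret key. In practice it would be loaded from a secrets
# manager, never hard-coded in source.
SECRET_KEY = b"replace-with-a-randomly-generated-key"

# Hypothetical set of field names treated as PII for this example.
PII_FIELDS = {"name", "email"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict) -> dict:
    """Copy a record, replacing the values of PII fields with pseudonyms."""
    return {
        field: pseudonymize(str(value)) if field in PII_FIELDS else value
        for field, value in record.items()
    }


# Made-up record for illustration only.
employee = {"name": "Jane Doe", "email": "jane@example.com", "department": "Sales"}
scrubbed = scrub_record(employee)
print(scrubbed)
```

Because the same input always maps to the same digest under a given key, records can still be joined or counted per person without exposing the underlying name or email address.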

In recent months, the United States and the United Kingdom have started making progress in the government supervision of AI research capabilities, emphasizing initiatives to expand access to the powerful supercomputers essential for training AI systems. On Oct. 30, 2023, US President Joe Biden signed the country's inaugural AI executive order, issuing a comprehensive set of directives for federal agencies to govern the use of AI and establish safeguards for the technology.

Although very few laws regarding the use of AI have actually been put into place, companies must remain in compliance with data privacy legislation like HIPAA and GDPR whenever an AI system handles personal data. Companies should also clarify who is responsible and legally liable for AI system errors or failures. In some cases, developers and manufacturers of AI systems may be held responsible for negative outcomes, but operators or users could also be held liable in cases where human oversight is required for the AI system's proper functioning. This is why employers that heavily rely on AI systems must have clear usage guidelines and may consider obtaining liability insurance for these projects to mitigate financial risks.

Avoiding Bias in AI Models

The deployment of AI systems can easily produce ethical dilemmas and biased decisions when there is little human input. For example, in August 2023, iTutorGroup agreed to pay $365,000 to settle a lawsuit brought by the EEOC, which accused the company of using AI-powered recruiting software that automatically rejected female applicants aged 55 and older and male applicants aged 60 and older. Over 200 qualified applicants were affected by this age-biased hiring model. The same kind of bias could occur with nearly any protected characteristic, so it is essential to regularly audit AI models for bias and take corrective action when bias is identified.
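One simple way to start such an audit is to compare selection rates across groups. The sketch below computes each group's rate and its ratio to the highest group's rate, then flags any group under 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment guidance. The group labels and decision data are made up for illustration, and a real audit would involve more than this single screening metric.

```python
from collections import Counter


def selection_rates(decisions):
    """Map each group to its share of positive decisions.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no positive decisions to compare")
    return {group: rate / best for group, rate in rates.items()}


# Made-up screening outcomes: 20 of 50 younger applicants advance,
# versus 10 of 50 older applicants.
decisions = (
    [("under_40", True)] * 20 + [("under_40", False)] * 30
    + [("40_plus", True)] * 10 + [("40_plus", False)] * 40
)

rates = selection_rates(decisions)
ratios = adverse_impact_ratios(rates)

# Groups whose ratio falls below four-fifths warrant a closer look.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(rates, ratios, flagged)
```

A flagged group is a prompt for human investigation, not proof of discrimination; the check is meant to be rerun whenever the model or its input data changes.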

In another example, a study published in Science revealed that a healthcare risk-prediction algorithm, applied to over 200 million people in the U.S., exhibited racial bias due to a flawed metric for determining medical needs. The algorithm, designed to identify patients suitable for "high-risk care management" programs, relied on healthcare spending as a proxy for medical need. However, this proxy did not work effectively, particularly for black patients, who tended to have greater medical need than white patients with the same level of spending. Consequently, black patients received lower risk scores than their health warranted, which reduced their eligibility for high-risk care management. The study underscored the unintended biases embedded in algorithmic decision-making processes.

Bias also extends beyond the way AI and algorithms work: unequal access to the technology affects social and economic equity. AI technologies must be accessible to all, regardless of socioeconomic status or education level, so that they do not exacerbate existing inequalities. As workplaces expand the use of AI, proper education, software, and devices must be provided to all employees in order to reduce potential bias. Including a wide range of perspectives when developing any AI models or strategies can also help reduce bias.

Ethical Frameworks & Human Review in AI Projects

Companies should develop and adhere to ethical frameworks that guide the development of AI projects and may consider forming an ethical review committee to evaluate and advise on AI systems. Building on the workplace code of conduct, the ethical review committee or company leadership can establish clear ethical principles and guidelines for employee use of AI. One of the most important parts of these guidelines is a human component in the AI process, with a focus on human verification of information rather than sole reliance on machine learning.

A lack of human review in AI research can easily result in the sharing of incorrect information or even descriptions of events that never happened. For example, in May 2023, attorney Steven A. Schwartz submitted a legal brief that was prepared using ChatGPT, which included six fabricated cases with false information and citations. Schwartz and partner Peter LoDuca received a $5,000 fine for not confirming the sources before submission, and the lawsuit itself was later dismissed.

In another situation, the Gannett newspaper network used a tool called LedeAI to write headlines for local markets like the Louisville Courier-Journal, AZ Central, Florida Today, and the Milwaukee Journal Sentinel. One viral headline that no human had checked read, “The Worthington Christian [[WINNING_TEAM_MASCOT]] defeated the Westerville North [[LOSING_TEAM_MASCOT]] 2-1 in an Ohio boys soccer game on Saturday.” Cases like this shed light on the tangible value of requiring a human component in the use of AI technologies.
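An automated check can catch this particular failure before a human reviewer even sees the text. The sketch below, which is a hypothetical pre-publication gate and not a description of Gannett's or LedeAI's actual pipeline, scans generated copy for unfilled `[[PLACEHOLDER]]` tokens and blocks publication if any remain.

```python
import re

# Matches unfilled template tokens such as [[WINNING_TEAM_MASCOT]].
PLACEHOLDER = re.compile(r"\[\[[A-Z0-9_]+\]\]")


def unfilled_placeholders(text: str) -> list:
    """Return every unfilled template token found in the text."""
    return PLACEHOLDER.findall(text)


def ready_to_publish(text: str) -> bool:
    """Allow publication only when no template tokens remain."""
    return not unfilled_placeholders(text)


headline = (
    "The Worthington Christian [[WINNING_TEAM_MASCOT]] defeated the "
    "Westerville North [[LOSING_TEAM_MASCOT]] 2-1 in an Ohio boys "
    "soccer game on Saturday."
)

if not ready_to_publish(headline):
    # In a real workflow this would route the item to a human editor.
    print("Held for review:", unfilled_placeholders(headline))
```

A check like this only covers one known failure pattern, so it supplements human review of AI-generated content rather than standing in for it.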

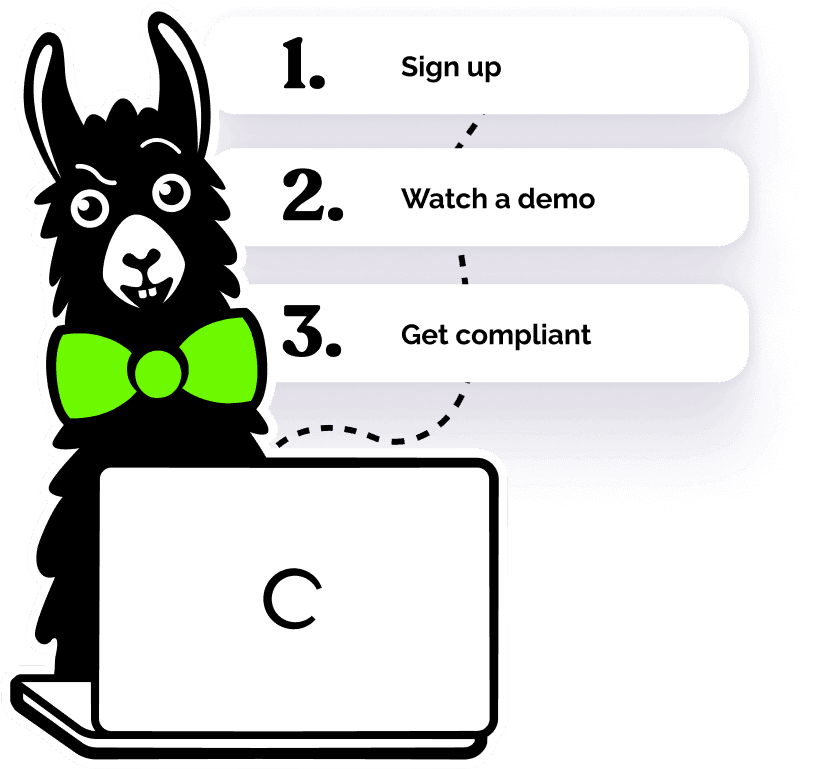

Training Employees on AI Safety

As you seek to implement AI technologies into your company’s workflow, EasyLlama is here to help train you and your employees on data privacy requirements and AI safety. Our modern and engaging courses cover the latest trends and legislation for a holistic and proactive approach to AI safety. We integrate ethical considerations into our interactive quizzes and real-life scenarios to teach your employees how to use AI safely and effectively, boosting your company’s productivity and technological prowess. Access your free course preview to learn more about EasyLlama’s tech training suite!